Scientific Consistency at General Electric

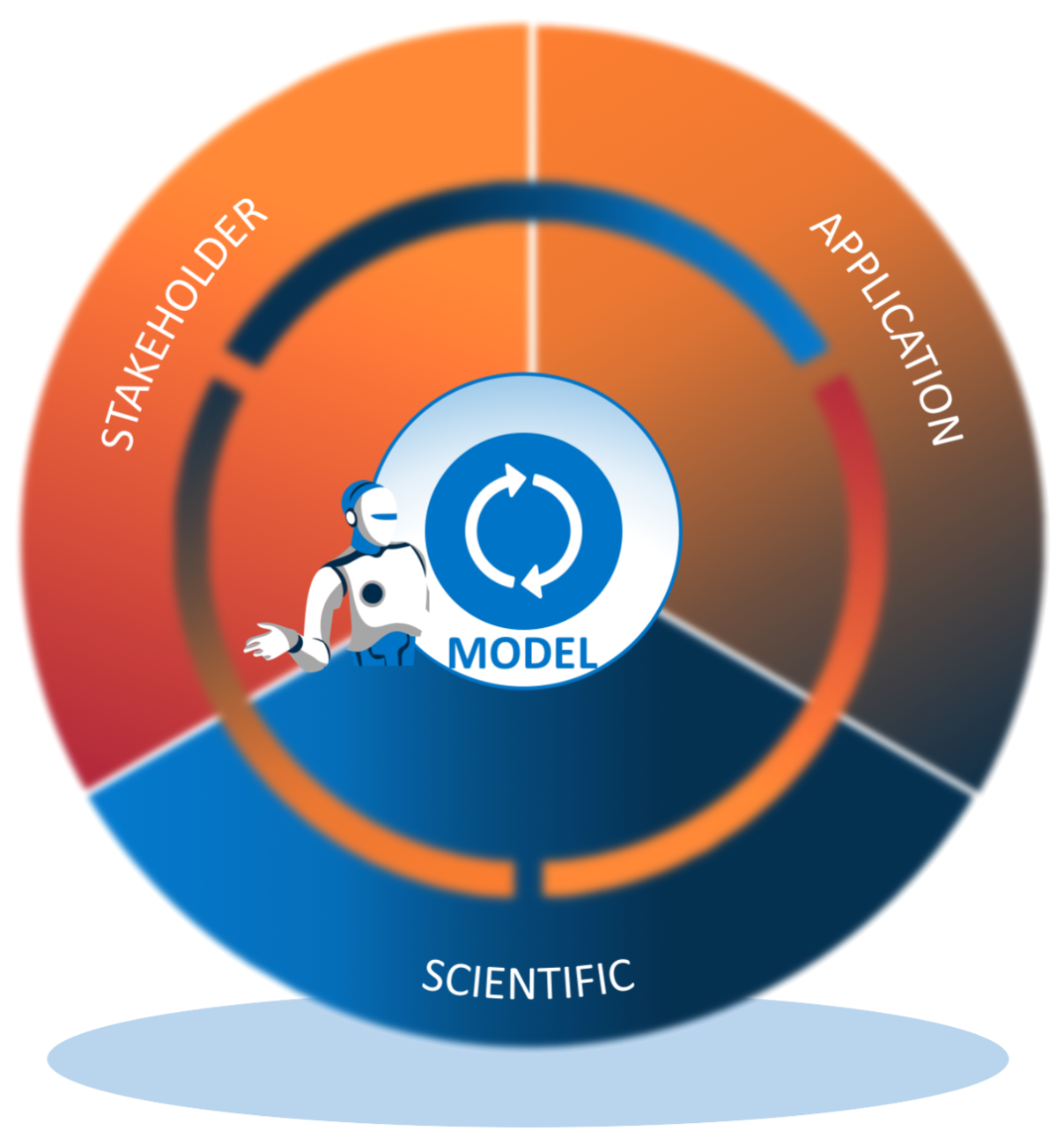

For an AI model to solve a real-world problem, it needs to be trained to represent reality. The state in which a model has been validated as representing reality is what we call scientific consistency. AI project teams achieve this state by comparing a model’s output with a reality surrogate, such as a domain expert or confirmatory evidence (empirical phenomena also known as “ground truth”). Comparing activities are key to achieving scientific consistency. They illuminate model mechanics and performance and expose model-solution mismatches, which teams remediate by adjusting the data, features, algorithm, or domain knowledge.

GE’s Corporate Environment, Health, and Safety (EHS) team provided company-wide governance and oversight of environment, health, and safety practices.[foot]B. H. Wixom, I. A. Someh, and C. M. Beath, “GE’s Environment, Health, and Safety Team Creates Value Using Machine Learning,” MIT Sloan CISR Working Paper No. 448, November 2020, https://cisr.mit.edu/publication/MIT_CISRwp448_GEMachineLearning_WixomSomehBeath.[/foot] In 2016, a team of EHS leaders formulated a set of standards, called Life Saving Principles (LSP), to guide work practices in high-risk operations. To confirm that GE contractors had robust EHS programs in place, GE expanded its contractor onboarding process to include LSP oversight.

The contractor onboarding process was exacting and labor intensive: hundreds of GE EHS professionals vetted the approximately 80,000 contractors the company hired each year. By 2020, GE had developed and implemented an AI-enabled Contractor Document Assessment (CDA) application, a bolt-on tool available to all GE EHS professionals during contractor onboarding. The tool began to free EHS professionals to devote more of their expertise to field execution and higher-value EHS work.

At the outset, a team of data scientists, digital/IT professionals, and EHS experts selected a natural language processing algorithm that required EHS experts to build, from scratch, a list of words and phrases for the algorithm to draw on during modeling. The chosen technique required at least 600 contractor documents for model training; however, at that time only about 300 documents had classification labels, and only 230 of those were in a machine-readable format. The team therefore amassed properly digitized documents, and EHS experts read each one from start to finish and annotated it. The experts identified key words and terms that indicated whether LSP requirements were met, and why, and they classified each document according to whether it satisfied the LSP. The team had expected the project to take three months, but building the training data set alone took more than six months.
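The case does not say how the expert-built list of words and phrases fed the NLP algorithm. One common pattern is to search each document for the curated phrases and record the matches both as human-readable evidence and as binary model features. The Python sketch below assumes that pattern; the requirement names, the phrases, and the functions `find_evidence` and `to_features` are hypothetical illustrations, not GE’s implementation.

```python
import re

# Hypothetical expert-curated phrase lists keyed by illustrative LSP
# requirement names. The actual GE lists and requirement names are not public.
PHRASE_LISTS: dict[str, list[str]] = {
    "fall_protection": ["fall protection", "harness", "guardrail"],
    "lockout_tagout": ["lockout", "tagout", "energy isolation"],
}


def find_evidence(text: str, phrase_lists: dict[str, list[str]]) -> dict[str, list[str]]:
    """Return, for each requirement, the expert phrases found in the document text."""
    # Lowercase and collapse whitespace so matching ignores case and line breaks.
    normalized = re.sub(r"\s+", " ", text.lower())
    evidence: dict[str, list[str]] = {}
    for requirement, phrases in phrase_lists.items():
        # Whole-word match, so "harness" does not match "harnessing".
        evidence[requirement] = [
            p for p in phrases
            if re.search(r"\b" + re.escape(p) + r"\b", normalized)
        ]
    return evidence


def to_features(evidence: dict[str, list[str]], phrase_lists: dict[str, list[str]]) -> list[int]:
    """Flatten matches into a 0/1 feature vector with one slot per phrase, in list order."""
    return [
        int(p in evidence[req])
        for req, phrases in phrase_lists.items()
        for p in phrases
    ]
```

For example, the text “All workers wear a full-body Harness; energy isolation follows our lockout procedure.” yields `harness` as evidence for the fall-protection requirement and `lockout` and `energy isolation` for the lockout/tagout requirement. Keeping the matched phrases, not just the feature vector, is what would let a reviewer see why the model reached a decision.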

To facilitate model training and development, developers created a user interface that listed the applicable LSP requirements for each document and showed, for each requirement, whether the document passed (displayed in blue) or failed (displayed in red), along with the model’s probability, displayed as a percentage, that this outcome was correct. Reviewers could then drill down into the document to inspect it firsthand. When a reviewer disagreed with the model’s assessment, they could comment on evidence the model should have considered, specify where in the document that evidence appeared, and override the model’s satisfied/not satisfied decision for each requirement. The interface also gave access to the full review history across evaluators and included a dashboard that tracked what had been reviewed, what had been rejected, and what decision had been made.
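The review workflow described above implies a simple per-requirement record: the model’s call and probability, an optional reviewer override with a comment and evidence location, and a history of decisions. The Python sketch below models that record under those assumptions; the class `RequirementAssessment`, its fields, and its methods are hypothetical and are not taken from the CDA application.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RequirementAssessment:
    """Hypothetical review-screen row: one LSP requirement for one document."""
    requirement: str
    model_satisfied: bool                       # model's call: satisfied / not satisfied
    probability: float                          # model's confidence in that call, 0.0 to 1.0
    reviewer_satisfied: Optional[bool] = None   # set only when a reviewer overrides
    comment: str = ""                           # evidence the model should have considered
    evidence_location: str = ""                 # where that evidence appears, e.g. "p. 12"
    history: list[tuple[str, bool, str]] = field(default_factory=list)

    def override(self, reviewer: str, satisfied: bool, comment: str, location: str) -> None:
        """Record a reviewer's override; earlier entries remain in the history."""
        self.reviewer_satisfied = satisfied
        self.comment = comment
        self.evidence_location = location
        self.history.append((reviewer, satisfied, comment))

    @property
    def final_satisfied(self) -> bool:
        # A reviewer's override, when present, takes precedence over the model.
        if self.reviewer_satisfied is not None:
            return self.reviewer_satisfied
        return self.model_satisfied

    def display(self) -> str:
        """Render the model's call as blue (passed) or red (failed) with its probability."""
        color = "blue" if self.model_satisfied else "red"
        return f"{self.requirement}: {color} ({self.probability:.0%})"
```

Keeping the model’s original call alongside the override, rather than overwriting it, is what makes the later comparison between model and reviewer possible: each override becomes a labeled example of a model-solution mismatch.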

Over time, the comparing activities led to model adjustments and retraining, which made the model more accurate and eliminated false negative results. The comparing activities also revealed that domain experts sometimes made errors in judgment and that different reviewers sometimes interpreted the same document text slightly differently. In response, the project team developed feedback and education for evaluators.
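The comparing activities can be summarized with two simple tallies: model calls against reviewer judgments, and one reviewer’s decisions against another’s. The case does not define which outcome counts as a “false negative,” so the Python sketch below assumes that “satisfied” is the positive class; under that assumption, a false negative is a requirement the model marked not satisfied but a reviewer judged satisfied. The function names `compare_with_reviewers` and `percent_agreement` are hypothetical.

```python
def compare_with_reviewers(pairs: list[tuple[bool, bool]]) -> dict[str, int]:
    """Tally model calls against reviewer judgments (the reality surrogate).

    Each pair is (model_satisfied, reviewer_satisfied). Assumption: "satisfied"
    is the positive class, so a false negative is a requirement the model
    marked not satisfied that a reviewer judged satisfied.
    """
    counts = {"true_positive": 0, "true_negative": 0,
              "false_positive": 0, "false_negative": 0}
    for model, reviewer in pairs:
        if model and reviewer:
            counts["true_positive"] += 1
        elif not model and not reviewer:
            counts["true_negative"] += 1
        elif model and not reviewer:
            counts["false_positive"] += 1
        else:
            counts["false_negative"] += 1
    return counts


def percent_agreement(a: list[bool], b: list[bool]) -> float:
    """Share of items on which two reviewers reached the same decision."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty decision lists")
    return sum(x == y for x, y in zip(a, b)) / len(a)
```

A falling false-negative count across retraining rounds would be one way to track the improvement the case describes, while low agreement between reviewers on the same documents points to the interpretation differences that evaluator feedback and education were meant to address. Percent agreement is the simplest such measure; chance-corrected statistics such as Cohen’s kappa are a common alternative.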