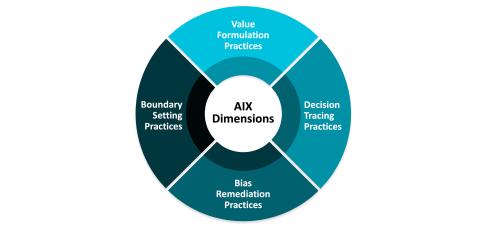

Four characteristics of AI (unproven value, model opacity, model drift, and mindless application) make it challenging to get stakeholders to trust AI solutions. As a result, organizations that strive to become AI-powered adopt practices that produce trustworthy AI solutions. Over time, these practices build AI Explanation (AIX) capability: an emerging enterprise capability that arises from the practices AI teams use to build stakeholder confidence in AI solutions. In this briefing, we first describe AIX capability and the four sets of practices used to build it. We then draw on a case study of Microsoft's AI journey to illustrate how that company has applied these practices.