Four groups have been involved in establishing, evolving, and executing the guidelines:

- The Compliance team evaluates data and analytics efforts to ensure they meet legal requirements and company standards. Members of this team are assigned to projects to head off compliance issues.

- The Futures team evaluates projects with regard to customer interests and ethical concerns. Through workshops, focus groups, and surveys, its members actively engage with customers and represent them on projects.

- The IAG IP and Data Committee, with senior representatives from across all of the company’s business divisions, makes foundational decisions such as how the company may combine data sets and how to achieve clarity regarding customer consent.

- The IAG leadership team addresses emergent issues and handles concerns that have been escalated.

As each group encounters new issues and achieves resolutions, the framework has been adapted accordingly.

IAG expected that the framework would change as technology progressed and customer expectations shifted. This has indeed been the case—and leaders have discovered that while the legal and ethical lenses have been influential, the customer (social) lens has been dominant in shaping the framework.

Protecting Personal Data

To reinforce IAG’s acceptable data use framework, leaders centralized the management of customer data. The IAG Data Governance Team has coordinated all access to the Hadoop data lake, ensuring that all customer information is governed before the company releases it. The team created a privacy rating process to classify all of the data in the lake according to its personally identifiable information (PII) and sensitivity. Michelle Pinheiro notes, “The information that we have about vehicles (like their make and model) is not as sensitive as our customers’ names [and their] date of birth and gender. Making that distinction is fundamental to governing the data effectively.”
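As a rough illustration of how a privacy rating process like this might work, the sketch below rates a data set by its most sensitive field. The three-level scale, the field names, and the individual ratings are all hypothetical; the text says only that vehicle details are less sensitive than names, dates of birth, and gender.

```python
from enum import IntEnum


class PrivacyRating(IntEnum):
    """Hypothetical three-level scale; the actual IAG scale is not described."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical field ratings, loosely following the distinction in the quote:
# vehicle attributes are less sensitive than personal attributes.
FIELD_RATINGS = {
    "vehicle_make": PrivacyRating.LOW,
    "vehicle_model": PrivacyRating.LOW,
    "customer_name": PrivacyRating.HIGH,
    "date_of_birth": PrivacyRating.HIGH,
    "gender": PrivacyRating.HIGH,
}


def rate_dataset(fields, unknown=PrivacyRating.HIGH):
    """Rate a data set as high as its most sensitive field.

    Fields without a known rating are treated as HIGH (an assumed,
    deliberately cautious default). An empty data set is rated LOW.
    """
    ratings = (FIELD_RATINGS.get(name, unknown) for name in fields)
    return max(ratings, default=PrivacyRating.LOW)
```

Rating a data set by its most sensitive field is one possible design choice: it means that adding a single personal attribute to an otherwise low-sensitivity extract raises the rating of the whole extract.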

To request data extracts, employees must articulate what data is needed, how it will be used, whether it will be disclosed outside the company, and what the desired benefit is. The Data Governance Team reviews each request and recommends whether and how to move forward. If the data request challenges any of IAG’s ethical data principles, the team will suggest an alternate way to achieve the business objective more safely, or put the project on a data risk register to be reviewed by the Data Governance Council. The team wants to be seen as an enabling rather than disabling function. According to Pinheiro, “The success of our governance processes for data usage hinges upon our role in enabling our colleagues to achieve their goals for using data, not in preventing them from moving forward.”
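The request-and-review flow described above can be sketched as a small triage function. This is only an illustration under stated assumptions: the class, field, and outcome names are hypothetical, and the judgment about whether a request challenges the ethical data principles is made by people, so it is modeled here as an input rather than computed.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Outcome(Enum):
    PROCEED = "proceed"
    SUGGEST_ALTERNATIVE = "suggest a safer alternative"
    RISK_REGISTER = "add to data risk register"


@dataclass
class DataRequest:
    """The four items an employee must articulate (field names are hypothetical)."""
    data_needed: List[str]
    intended_use: str
    disclosed_externally: bool
    desired_benefit: str


def review(request: DataRequest,
           challenges_principles: bool,
           safer_alternative: Optional[str] = None) -> Tuple[Outcome, str]:
    """Return a recommendation and a short note for one data request."""
    # A request that leaves any required item blank cannot be reviewed.
    required = ("data_needed", "intended_use", "desired_benefit")
    missing = [name for name in required if not getattr(request, name)]
    if missing:
        raise ValueError("incomplete request, missing: " + ", ".join(missing))

    if not challenges_principles:
        return Outcome.PROCEED, "no conflict with the data principles"
    if safer_alternative:
        return Outcome.SUGGEST_ALTERNATIVE, safer_alternative
    # No safer route was found, so the project goes to the council for review.
    return Outcome.RISK_REGISTER, "escalated for council review"
```

Note that none of the outcomes is a flat rejection, which mirrors the team's stated aim of enabling colleagues rather than blocking them.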

Privacy and Security leaders also rely on good data management practices to guide employees on acceptable data use. A team of ten dedicated data analysts monitors data quality metrics and remediates the underlying data when a metric drops below its tolerable threshold, thus ensuring accurate data for employees’ analyses. Another group oversees the tagging of data with contextual metadata that might indicate how and when the data was collected, its lifespan, or limitations to its use.
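The threshold monitoring described above could look something like the following minimal sketch. The metric names and threshold values are hypothetical; the text does not say which quality metrics IAG tracks or what its tolerances are.

```python
def metrics_needing_remediation(metrics, thresholds):
    """Return the names of metrics that have dropped below their threshold.

    `metrics` and `thresholds` both map a metric name to a score between
    0 and 1. Metrics without a configured threshold are not flagged.
    """
    return sorted(
        name
        for name, score in metrics.items()
        if name in thresholds and score < thresholds[name]
    )


# Hypothetical readings for one data set in the lake.
readings = {"completeness": 0.97, "validity": 0.88, "timeliness": 0.99}
tolerances = {"completeness": 0.95, "validity": 0.90}

flagged = metrics_needing_remediation(readings, tolerances)
```

Here only `validity` falls below its tolerance, so it is the one metric an analyst would be asked to investigate.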

Protecting Person-Centered Analytics

While as important to IAG as acceptable data use, acceptable analytics use has represented more of a grey area. Inferences about a customer such as smoking status and gender—and the creation, storage, management, and sharing of such inferences—are not governmentally regulated. IAG has found it impossible to anticipate all potential analytical use cases, applications, and effects. Chief Analytics Officer Rami Mukhtar advises pragmatism when managing analytics: “You need to have the frameworks and the capabilities to ensure that if issues arise, you can deal with them appropriately. But ultimately, [acceptable use of analytics] is about pushing the boundaries of where analytics can take you with very clear principles in mind.” One principle, for example, is not using machine learning techniques to infer customer identity when you don’t have consent to do so.

Regarding person-centered analytics, IAG has chosen to be conservative: It does not share analytics results outside of the company. The Privacy and Security team classifies analytics similarly to data according to the IAG PII sensitivity scale. And the team reviews how analytics are stored, how IAG’s insights are combined with third parties’, and how insight usage is tested with customers.

Governing Data and Analytics Use by Partners

IAG leaders consider customers’ data to be the company’s responsibility even after it has been shared with business partners. As such, the company maintains tight control over the data it provides to external parties. IAG reviews partner privacy agreements to learn where and how data will be stored or shared; establishes contractual controls regarding where data can flow and when it needs to be destroyed or removed; and audits its partners’ actions. The team has become adept at identifying non-specific terminology embedded in some partners’ privacy policies, such as terms that allow a partner to use data for unspecified reasons or for an unlimited period of time.

Growing an Acceptable Data Use Culture at IAG

IAG leaders have built acceptable data use capabilities by establishing a framework that has been shaped by a multitude of perspectives. The company is executing this framework through management of its data assets, analytical activities, and partnerships. And as a result, IAG’s culture has changed: employees have become more conscious of the importance of protecting personal data, the risks and benefits of leveraging person-centered analytics, and the value of questioning the stated goals and potential outcomes of projects. Chief Customer Officer Batch remarked, “At IAG, we recognise that data is our lifeblood; insurance is, after all, just a form of predictive analytics. Keeping our customers’ trust and being there when they need us is core to our purpose. We see access to our customers’ data as a privilege that’s critical to our effective operation, and we are continually ensuring we never take that for granted.”

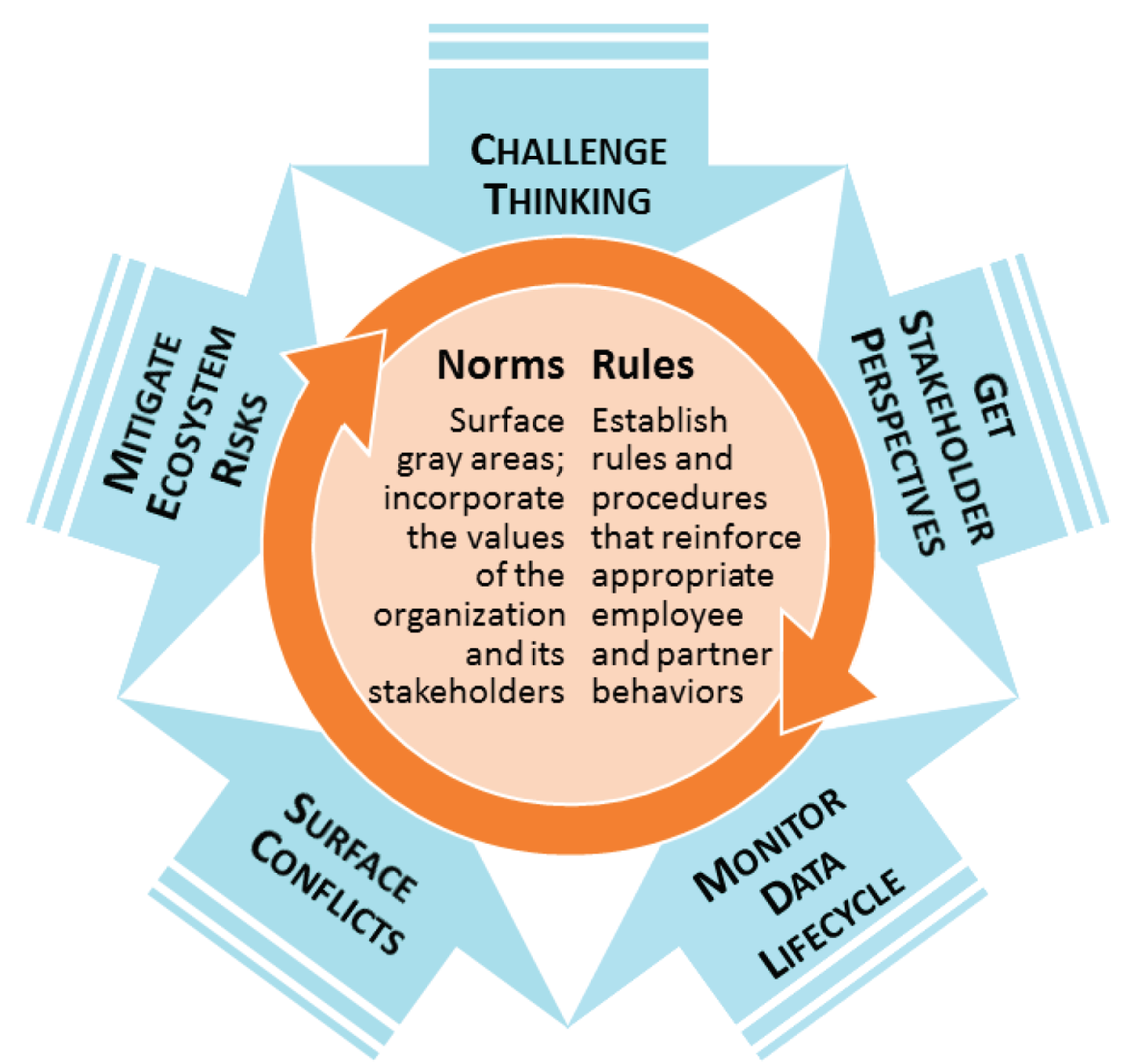

BUILDING NORMS

Management practices that facilitate the development of norms of acceptable data use are supported by five basic principles:

- Challenge current thinking and envision future alternatives to surface value conflicts inside the company. Example practices: devil’s advocacy, ethics review boards

- Incorporate direct and indirect stakeholder perspectives to surface value conflicts and perceptual risks outside the company. Example practices: involving stakeholders in pilots, appointing a stakeholder advocate on project teams

- Monitor and evaluate the way data is being converted into insight and action to understand employee data needs and behaviors. Example practices: usage request and approval processes, digital rights management technology

- Establish shared understanding, consensus, and buy-in regarding conflicts and resolutions to identify desired behaviors. Example practices: oversight boards, privacy impact assessment processes, employee training programs

- Accept responsibility for the data use of your ecosystem partners to surface risks that may result from organizational relationships. Example practices: partner data audits, partner data privacy reviews

This is not a time to just write rules; more rules won’t grow a shared understanding of acceptable data use in your company or prepare the company for emergent issues. It is the time to put practices in place to build norms that guide your employees to appropriate, confident action amidst transformation.